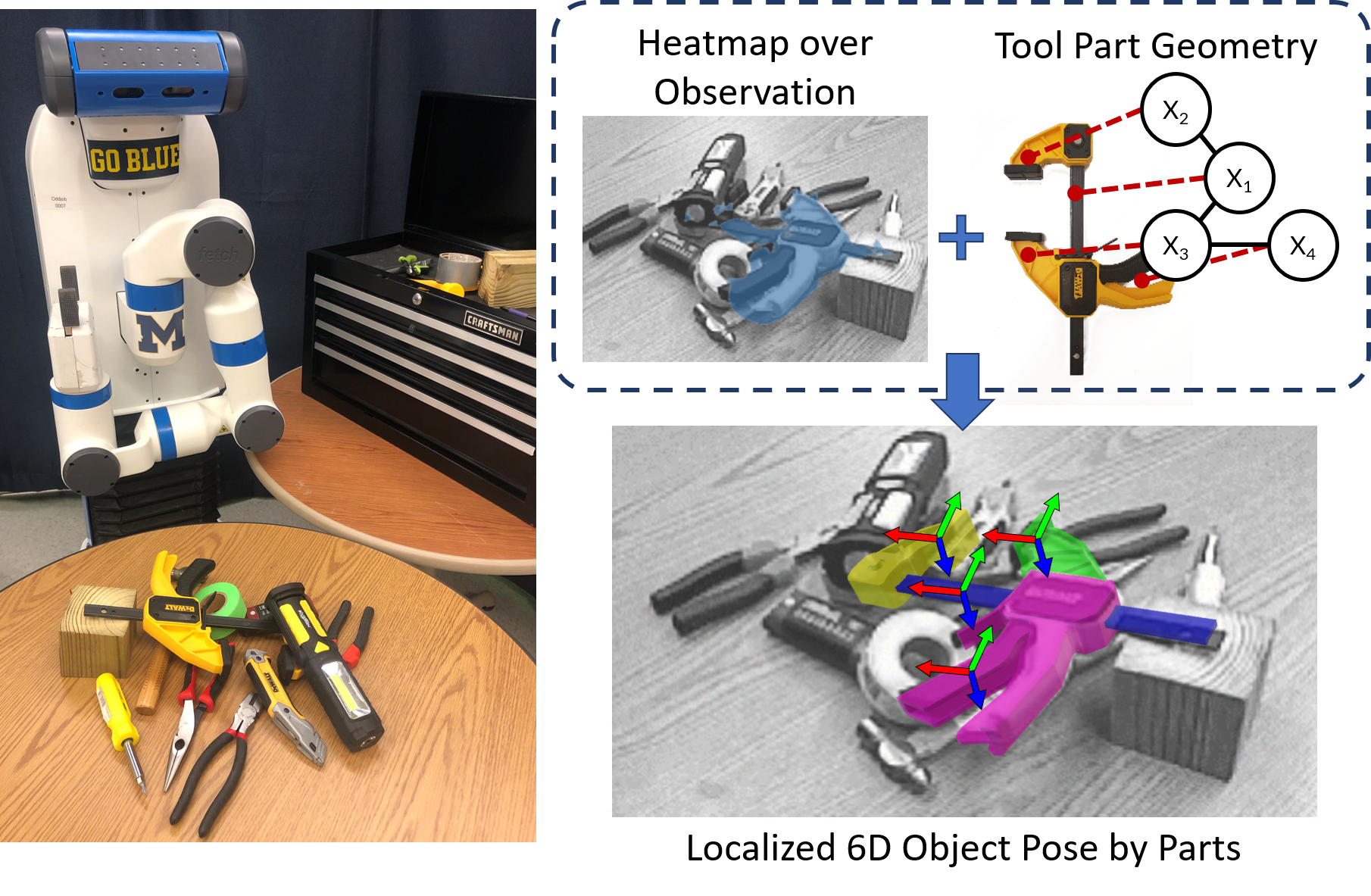

Parts-Based Articulated Object Localization in Clutter Using Belief Propagation

Jana Pavlasek, Stanley Lewis, Karthik Desingh, Odest Chadwicke Jenkins

Robots working in human environments must be able to perceive and act on challenging objects with articulations, such as a pile of tools. Articulated objects increase the dimensionality of the pose estimation problem, and partial observations in clutter create additional challenges. To address this problem, we present a generative-discriminative parts-based recognition and localization framework for articulated objects in clutter. We formulate articulated object pose estimation as inference in a Markov Random Field (MRF). Hidden nodes in this MRF represent the poses of the parts, and edges encode the articulation constraints between parts. Localization is performed within the MRF using an efficient belief propagation method. The method is informed by both part segmentation heatmaps over the observation, provided by a neural network, and the articulation constraints between object parts. Our generative-discriminative approach allows the proposed method to function in cluttered environments by inferring the pose of occluded parts using hypotheses from the visible parts. We demonstrate the efficacy of our method in a tabletop environment for recognizing and localizing hand tools in uncluttered and cluttered configurations.
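
To illustrate how belief propagation over a parts-based MRF can recover an occluded part from a visible one, the following is a minimal sketch under strong simplifying assumptions; it is not the method proposed in this paper. It assumes a two-part tool (a hypothetical "handle" and "head"), poses discretized to five positions along a line, a toy articulation constraint that places the head one cell to the right of the handle, and standard sum-product message passing on a chain. The names `sum_product_chain`, `handle_unary`, `head_unary`, and `articulation` are illustrative. The handle unary stands in for a segmentation heatmap score, and the head unary is uniform to mimic full occlusion.

```python
import numpy as np

def sum_product_chain(unaries, pairwise):
    """Sum-product belief propagation on a chain MRF with discrete states.

    unaries:  list of (K,) arrays, one observation potential per part.
    pairwise: list of (K, K) arrays; pairwise[i][a, b] is the compatibility
              of part i in state a with part i+1 in state b.
    Returns a list of normalized marginal beliefs, one per part.
    """
    n = len(unaries)
    k = len(unaries[0])
    # fwd[i]: message into node i from its left neighbor.
    # bwd[i]: message into node i from its right neighbor.
    fwd = [np.ones(k) for _ in range(n)]
    bwd = [np.ones(k) for _ in range(n)]

    # Left-to-right pass.
    for i in range(1, n):
        m = (unaries[i - 1] * fwd[i - 1]) @ pairwise[i - 1]
        fwd[i] = m / m.sum()
    # Right-to-left pass.
    for i in range(n - 2, -1, -1):
        m = pairwise[i] @ (unaries[i + 1] * bwd[i + 1])
        bwd[i] = m / m.sum()

    beliefs = []
    for i in range(n):
        b = unaries[i] * fwd[i] * bwd[i]
        beliefs.append(b / b.sum())
    return beliefs

K = 5  # number of discretized positions (assumption for this toy example)

# Visible part: the observation strongly supports position 2.
handle_unary = np.array([0.05, 0.05, 0.8, 0.05, 0.05])
# Occluded part: no observation evidence, so the unary is uniform.
head_unary = np.full(K, 1.0 / K)

# Toy articulation constraint: head sits one cell to the right of the handle.
articulation = np.full((K, K), 0.01)
for a in range(K - 1):
    articulation[a, a + 1] = 1.0

beliefs = sum_product_chain([handle_unary, head_unary], [articulation])
handle_estimate = int(np.argmax(beliefs[0]))
head_estimate = int(np.argmax(beliefs[1]))
```

In this sketch the head belief concentrates on the position implied by the handle hypothesis and the articulation constraint, even though the head itself contributes no observation evidence. On a chain, a single forward and backward pass yields exact marginals; graphs with loops or continuous pose spaces require iterative or sampling-based variants.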