NeRF-Frenemy

Co-Opting Adversarial Learning for Autonomy-Directed Co-Design

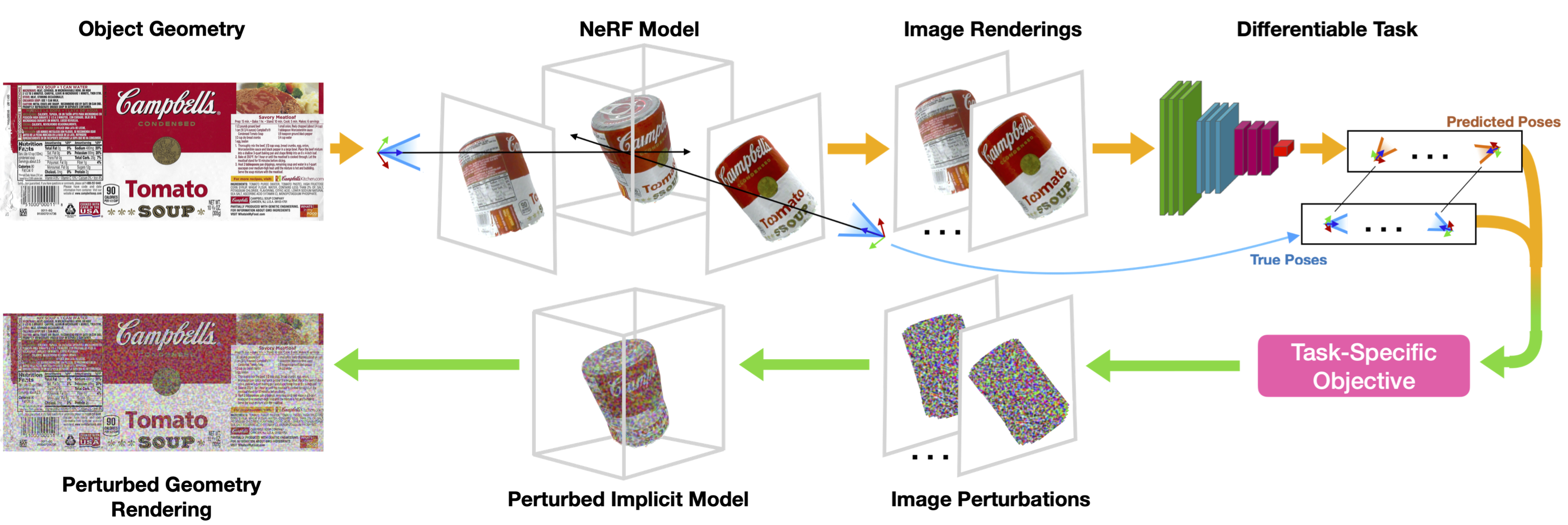

As robotics becomes more prevalent in industries such as warehousing and manufacturing, there is a growing need to optimize product design for downstream autonomous tasks. For example, in object segmentation or pose regression, minimizing featureless or symmetric regions of an object can improve the quality of the estimates. Adding visual fiducial markers can provide landmarks for these tasks; however, the markers can become warped on deformable packaging or distract from an object's designed branding. To address this gap, our proposed framework, NeRF-Frenemy, applies techniques introduced by the adversarial machine learning community, but in a cooperative manner, to improve the fidelity of manipulation-focused perception tasks. NeRF-Frenemy optimizes a neural radiance field (NeRF) representation of an object against a given pre-trained perception model by seeking a minimal perturbation to the implicit space. The resulting changes to the implicit space can then be realized as a modified object appearance that improves the given model's performance on the object. In this work, we show an initial result of this approach on an object from the YCB dataset against the image segmentation portion of the PoseCNN model.
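To make the idea of a cooperative, minimal perturbation concrete, the following is a toy sketch and not the NeRF-Frenemy implementation. It assumes a hypothetical stand-in for each component: a short parameter vector `theta` plays the role of the object's implicit representation, rendering is reduced to the identity, and a frozen logistic classifier (`w`, `b`) plays the role of the pre-trained perception model. The names `model_confidence`, `cooperative_perturbation`, `lam`, `lr`, and `steps` are all illustrative assumptions. Where an adversarial attack would raise the model's loss, this sketch lowers it, while an L2 penalty keeps the perturbation small.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def model_confidence(x, w, b):
    """Frozen toy 'perception model' (assumption: a logistic classifier).

    Returns the probability the model assigns to the correct label for input x.
    """
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def cooperative_perturbation(theta, w, b, lam=0.1, lr=0.1, steps=200):
    """Find a small perturbation `delta` that helps the frozen model.

    Minimizes  -log(confidence(theta + delta)) + lam * ||delta||^2
    by plain gradient descent. Only `delta` is updated; the model
    parameters (w, b) are never changed.
    """
    delta = [0.0] * len(theta)
    for _ in range(steps):
        x = [t + d for t, d in zip(theta, delta)]
        p = model_confidence(x, w, b)
        # d/d(delta_i) of the task loss is -(1 - p) * w_i;
        # d/d(delta_i) of the penalty is 2 * lam * delta_i.
        grad = [-(1.0 - p) * wi + 2.0 * lam * di for wi, di in zip(w, delta)]
        delta = [di - lr * gi for di, gi in zip(delta, grad)]
    return delta
```

In this sketch, calling `cooperative_perturbation(theta, w, b)` returns a `delta` for which `model_confidence` on `theta + delta` is higher than on `theta` alone, and a larger `lam` trades some of that gain for a smaller change. In the setting described above, the gradient would instead have to flow from the perception model's loss through a differentiable renderer into the NeRF parameters, which this toy omits.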

Citation

@article{lewis2022nerfrenemy,
  author  = {Lewis, Stanley and Aldeeb, Bahaa and Opipari, Anthony and Olson, Elizabeth and Kisailus, Cameron and Jenkins, Odest Chadwicke},
  title   = {NeRF-Frenemy: Co-Opting Adversarial Learning for Autonomy-Directed Co-Design},
  year    = {2022},
  journal = {RSS Workshop-Implicit Representations for Robotic Manipulation}
}