NARF22: Neural Articulated Radiance Fields for Configuration-Aware Rendering

Stanley Lewis

University of Michigan

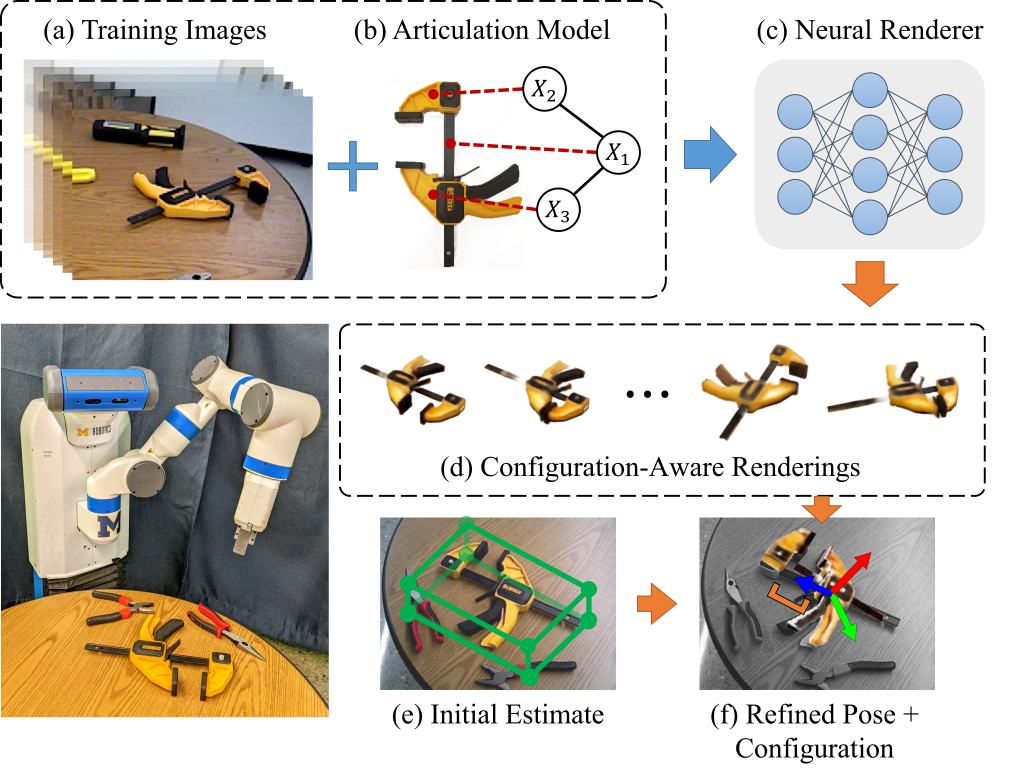

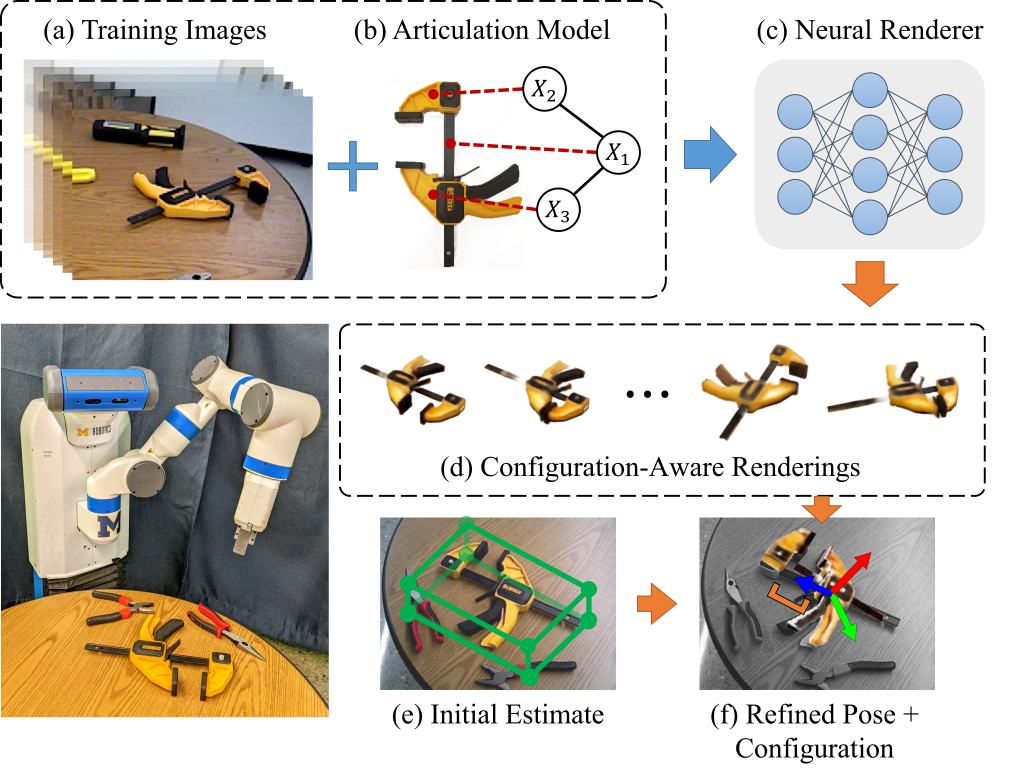

Articulated objects pose a unique challenge for robotic perception and manipulation. Their increased number of degrees-of-freedom makes tasks such as localization computationally difficult, while also making the process of real-world dataset collection unscalable. With the aim of addressing these scalability issues, we propose Neural Articulated Radiance Fields (NARF22), a pipeline which uses a fully-differentiable, configuration-parameterized Neural Radiance Field (NeRF) as a means of providing high quality renderings of articulated objects. NARF22 requires no explicit knowledge of the object structure at inference time. We propose a two-stage parts-based training mechanism which allows the object rendering models to generalize well across the configuration space even if the underlying training data has as few as one configuration represented. We demonstrate the efficacy of NARF22 by training configurable renderers on a real-world articulated tool dataset collected via a Fetch mobile manipulation robot. We show the applicability of the model to gradient-based inference methods through a configuration estimation and 6 degree-of-freedom pose refinement task.

Configuration-Aware Renderings

NARF is trainined using labelled training images containing object poses, masks, and ground truth configurations.

The articulation model of the object is required at training time.

At testing time, NARF can render images of articulated objects at arbitrary poses and articulations.

The following are examples of NARF configuration-aware renderings of articulated objects from the Progress Tools dataset.

These models were trained with only a small number of example configurations in the training data.

Pose & Configuration Refinement

The NARF rendering pipeline is fully differentiable.

We perform 6 DoF pose and configuration refinement by computing Mean Squared Error loss between the rendering at an initial hypothesis and the observation image.

The initial pose and configuration is then refined iteratively through Stochastic Gradient Descent using the gradient of the MSE loss.

In the examples below, the 6 DoF pose of the clamp is initialized to an estimate given by an external module.

The configuration of the clamp is initialized randomly within its articulation constraints.

We are able to recover the configuration and refine the 6 DoF pose using SGD over the gradients of the NARF model.

@inproceedings{lewis2022narf,

author = {Lewis, Stanley and Pavlasek, Jana and Jenkins, Odest Chadwicke},

title = {{NARF22}: Neural Articulated Radiance Fields for Configuration-Aware Rendering},

year = {2022},

booktitle = {International Conference on Intelligent Robots and Systems ({IROS})},

organization = {IEEE}

}